Chapter 3 Basic Data Analysis in R

Before you go ahead and run the codes in this coursebook, it’s often a good idea to go through some initial setup. Under the Training Objectives section, we’ll outline the syllabus, identify the key objectives and set up expectations for each module. Under the Libraries and Setup section you’ll see some code to initialize our workspace and the libraries we’ll be using for the projects. You may want to make sure that the libraries are installed beforehand by referring back to the packages listed here.

3.1 Preface

3.1.1 Introduction

From the previous course, we have learned how R and its extension Rmarkdown can be a versatile tool to generate a high-quality report in various outputs (PDF, Word, HTML Report, Presentation, etc.) Furthermore, Rmarkdown is distinguished for its ability to incorporate the data analytical process from raw data into charts on the document, without ruining its visual. Proper data analysis will certainly result in high-quality content for your business report. Knowing various data analytical processes in R will greatly enhance our ability to use R for automated reporting, using it to its fullest potential.

3.1.2 Training Objectives

This is the second course of Automate: Business Reporting with R. The objective of this course is to provide participants a comprehensive introduction to understand the basic data analysis in R. We will learn techniques on how to transform raw data into a format that is easier to analyze and using descriptive statistics to explain our data. The syllabus covers:

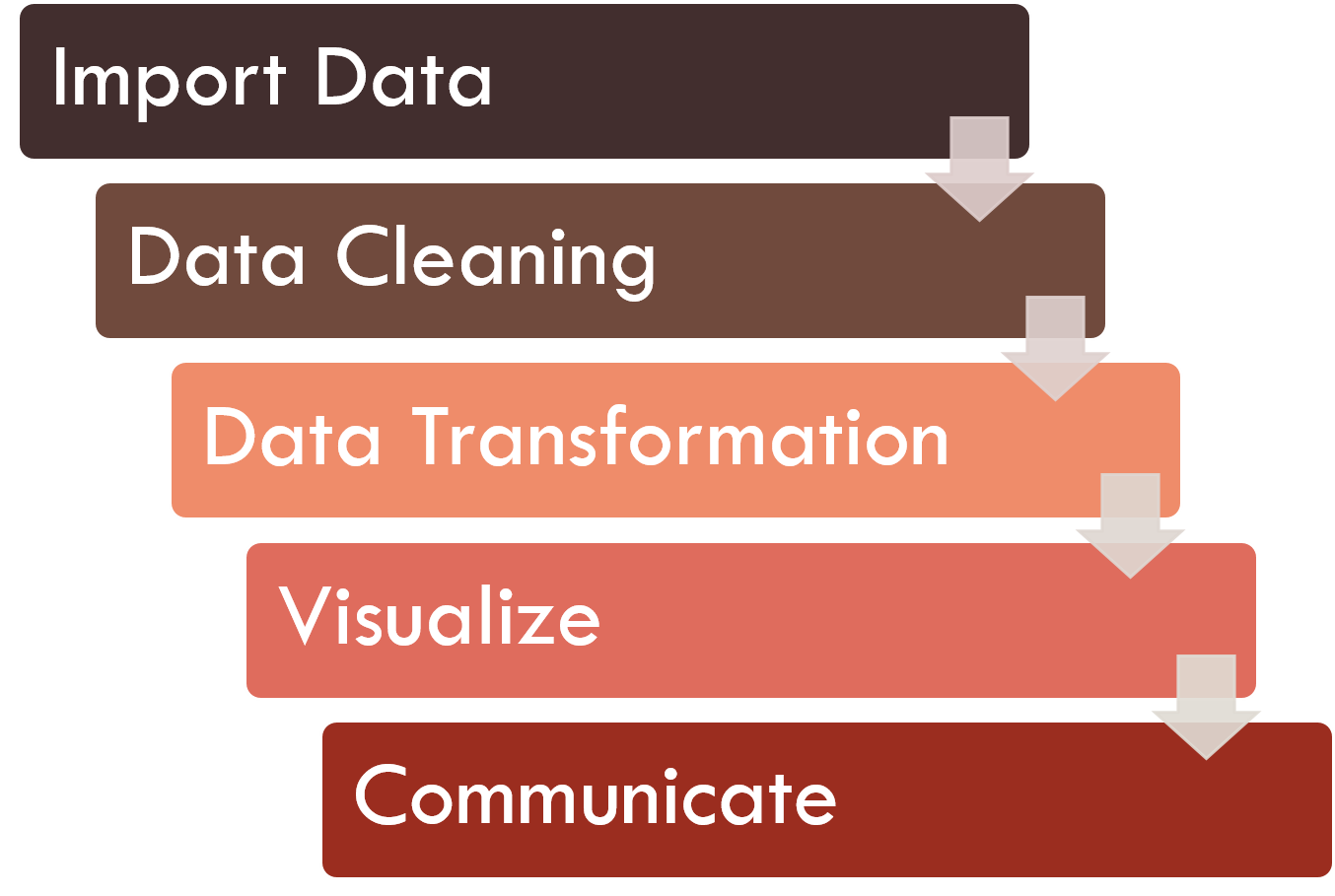

Data Science Workflow

Basic Programming in R

- Object and Environment

- Data Structures in R

- Data Type in R

Tidying Messy Sheets in R

- Importing Dataset

- Data Cleansing

- Data Transformation

3.1.3 Library and Setup

In this Library and Setup section you’ll see some code to initialize our workspace, and the packages we’ll be using for this project.

Packages are collections of R functions, data, and compiled code in a well-defined format. The directory where packages are stored is called the library. R comes with a standard set of packages. Others are available for download and installation. Once installed, they have to be loaded into the session to be used.

You will need to use install.packages() to install any packages that are not yet downloaded onto your machine. To install packages, type the command below on your console then press ENTER.

Then you need to load the package into your workspace using the library() function:

3.2 Data Science Workflow

Data analysis is highly linked with a buzz-word that many people talk about these days that is Data Science. Data Science is a field that focuses on the study and development of how we can analyze data. It compromises various acts of data analysis which aims to transform data into valuable insights.

There is a certain workflow that applies to a data science project. Below is a good chart that explains the workflow of a data science project.

The first step of a data science work is to import our data into our analytics toolset. There are various analytical tools available, but most of the data science work is usually performed using programming languages. In this workshop, we will be using R.

Once we’ve imported our data, we need to clean our data. Data cleaning means to store our data with the correct labels, values, and structure in the way it should be stored. Data cleaning is important because it helps us focus on our questions about the data, instead of struggling to get our data into the right form for different purposes.

Once we have clean data, the next step is to transform it. Data transformation is the process of converting data or information from one format to another. In data science work, it is usually related to narrowing our observations of interest from the rest of the data. This step is important because it helps us focus on our business questions.

As an example is to see communities of people in Jakarta instead of in Indonesia. This is done by creating new data from our dataset by subsetting, summarizing, or adding new variables or information that explain our data.

Once we have transformed our data, we can perform some exploratory data analysis using descriptive statistics and visualize them through charts to improve our understanding of the data. We may also build a machine learning model to predict future values.

The last step of data science work is communication. It is a critical part of any data analysis project. In this workshop, we will communicate our findings on data through business reports using Rmarkdown.

3.3 Basic Programming in R

The first important step in learning data analysis in R is to recognize how R works, and how it works using RStudio- Integrated Development Environment (IDE) for R. First, we should learn about how R and the features in RStudio work.

As an introduction, let’s write our first R code by typing print("Hello!") into the console (by default, it is located at the bottom-left of RStudio) and press ENTER. Another preferred method is to run it in the chunk of R Markdown; see the green button that says “Run Current Chunk”, or press CTRL + SHIFT + ENTER in the chunk:

#> [1] "Hello!"print() is a function in R. Function is code that commands or tells R to do something. In this example, print() tells R to “print” the parameter we put inside the bracket, that is "Hello!".

Also, note the # character before our code on several lines in the chunks above. These characters will be considered as comments, and will not be considered as commands by R. If we run the chunk above, the print("Hello Algoritma!") will be ignored by R

3.3.1 Object and Environment

"Hello!" in the previous chunk, was an object. Objects can be written raw, like the example above, or can be a value that assigned to an object that has a name, using an operator <-:

#> [1] "Programming"After assigning it to an object, we can see a list of the objects we have created in the Environment Pane (by default, located in the upper-right of RStudio). We usually assign our data into an object and further analyze it in R.

It is also important to note that R is case-sensitive; for example, an object “Algoritma” and “algoritma” will be considered different:

#> [1] TRUE#> [1] FALSE# try using object `activity` inside the function `paste()`

paste(activity, "is one of the most therapeutic activities.")#> [1] "Programming is one of the most therapeutic activities."# there is no object named 'Activity';

# therefore the command below will reveals an error

# print(Activity)Every object that has a name will be unique, so if we create an object with the same name, it will replace the old object:

#> [1] "Coding is one of the most therapeutic activities."Notice that the word “Programming” has been replaced by “Coding”.

3.3.2 Data Structures in R

The object named activity that we have created before is one of the basic forms of data structures in R, namely vector. The other data structures that we will study are matrix, and data.frame.

3.3.2.1 Vector

Vector is a 1-dimensional object (meaning that it only has length; not in the form of rows & columns). A vector can be either one of these types:

- character

- numeric

- integer

- logical

A vector can only store values with the same type:

vector1 <- c("Learning", "Data", "Science", "2019")

vector2 <- c(1, 0, 1)

# we can check the data type using `class()`

class(vector1)#> [1] "character"#> [1] "numeric"We use the function c() or ‘concatenate’ to merge two or more values/objects. We will see a lot of this function throughout our courses.

Every object has attributes. Some common attributes that we usually inspect are class, length, dim, colnames, etc.

We can use length() to inspect the length or the total number of values in a vector.

#> [1] 3We will discuss more about dim and colnames later in this course when we talk about 2-dimensional object.

3.3.2.1.1 Character

A character type vector will often be used to work with text data. For example, we have a list of names that we will send an email to:

#> [1] "Samuel Chan" "Tiara Dwiputri" "Ahmad Husain Abdullah"We can use the paste() function to make the sentence “Dear XXX,”, programmatically:

#> [1] "Dear Samuel Chan ," "Dear Tiara Dwiputri ,"

#> [3] "Dear Ahmad Husain Abdullah ,"As we see from the example above, we can process text data by assigning them into character vector. There is also a wide possibility for advanced data processing techniques in text analysis.

3.3.2.1.2 Numeric

A numeric type vector often used for numerical data processing, especially mathematical operations. For example, suppose that we have profit data from the last quarter of our business operations (3 months):

#> [1] 150 87 95We can use mean() to calculate the average of our profits:

#> [1] 110.6667R and its extensions have various functions that we can use to perform various kinds of mathematical operations and more complex statistics.

3.3.2.1.3 Integer

An integer type vector is basically the same as the numeric type vector; however, integer type cannot have numbers behind commas or is not a fraction.

int_vec <- c(0L, 1L, 4L, 7L, 10L)

#`L` is used to prevent R read these data as 'numeric' & label them as 'integer' instead.

int_vec#> [1] 0 1 4 7 10Because integers are basically the same as numeric, we can perform mathematical operations using them.

However, the best practice of using integers is to store index information or information that acts as an identifier, such as employee numbers, ID numbers, etc.

3.3.2.1.4 Logical

A logical type vector is better known as boolean terms in general programming languages. This type of vector has 2 kinds of values (TRUE/FALSE; T/F).

This type of vector is usually obtained from other operations, rather than being made by initially. For example, when we have data related to customers that are eligible/not to be given a promo based on their loyalty point:

If for example, the minimum points required to get a promo is 100 (cust_promo >= 100), we can use function ifelse() to classify whether a customer will be given a promo or not:

#> [1] TRUE FALSE TRUE TRUE3.3.2.1.5 Other Data Types: Factor & Date

During data processing in R, we will often use several more advanced data types, namely factor and date.

Factor

Factor is a character type vector that has levels or categorical values. Each level can appear more than once in a given vector and therefore have frequency.

fctr <- c("Type A", "Type A", "Type B", "Type A", "Type C", "Type C")

fctr <- as.factor(fctr) # converting character-type vector into factor

fctr#> [1] Type A Type A Type B Type A Type C Type C

#> Levels: Type A Type B Type CDate

Date is a special data type that contains date data in a standardized format.

purchase_date <- c("2019-01-01", "2019-01-01", "2019-01-02")

purchase_date <- as.Date(purchase_date) # converting character-type vector into date (ymd)

purchase_date#> [1] "2019-01-01" "2019-01-01" "2019-01-02"3.3.2.2 Matrix

Matrix is one way to store 2-dimensional data in an object. Same as vectors, a matrix can only store objects with the same type. To make a matrix, we can use the function matrix():

#> [,1] [,2]

#> [1,] 11 14

#> [2,] 12 15

#> [3,] 13 16Notice how the value in a matrix has index [1, 1] - can be read as [row index, column index]. We can access a certain value from a matrix by its index.

#> [1] 11 14#> [1] 13To see the dimension of a matrix, we can use the dim() function and it will return the number of rows and columns in the matrix.

#> [1] 3 2We can also retrieve or set the column names of a matrix-like object by using colnames(), while for the row names using rownames().

3.3.2.3 Data Frame

During our time processing data, we will be frequently faced with data in the form of tables, such as the results of a survey of each employee’s activities or the recapitulation of sales from each store branch. Data in the form of table is also known as data.frame. Similar to matrix, it has 2 dimensions namely rows–of observations–and columns–of variables, but it can store different data types.

For example, we can create a data.frame using a function data.frame():

categories_df <- data.frame(categories = c("OfficeSupplies", "Computers", "Packaging"), category_id = 111:113)

categories_df#> categories category_id

#> 1 OfficeSupplies 111

#> 2 Computers 112

#> 3 Packaging 113In this course, we will be focusing more on this data type.

3.4 Tidying Messy Sheets in R

We are now going to learn techniques on how to transform raw data into a format that is easier for analysis. R provides various functions that we can use to process our dataset; from importing datasets to complex aggregations. These functions are compiled in a package. To further learn about packages, you can see Library and Setup section.

Along with the development of R, we can now use several packages that are currently considered as best practices for processing data in R. These packages are included in tidyverse package. Additionally, as most workers use Microsoft Excel to store their data, we will also use readxl package to import an excel spreadsheet into R.

To load packages into our R session, type:

3.4.1 Importing Dataset

R can read data from various formats. For example, by default R has provided a function to import data in comma-separated value (.csv) format using read.csv(). Meanwhile, data stored in other formats may need additional packages and functions for importing.

In this example, we can use readxl package to import data stored in excel formats (.xlxs, .xls, etc.). We will use “data-attrition.xlsx” (located in /data of our working directory) as our data input and assign it to an object named attrition (for Sheet 1) and survey (for Sheet 2). This data stores employees attrition data and commonly analyzed by the Human Resource Department to know what factors might influence the employee’s decisions on leaving the company.

attrition <- read_excel(path = "data_input/data-attrition.xlsx",

sheet = 1) # sheet index or name

survey <- read_excel(path = "data_input/data-attrition.xlsx",

sheet = "survey")By default, the read_* function will try to detect the data type of each column. We can use the head() to see the first 6 rows of our data to confirm this:

| employee_number | age | gender | department | job_role | job_level | education | education_field | monthly_income | hourly_rate | daily_rate | monthly_rate | percent_salary_hike | performance_rating | standard_hours | over_time | over_18 | marital_status | business_travel | distance_from_home | stock_option_level | num_companies_worked | total_working_years | training_times_last_year | years_at_company | years_in_current_role | years_since_last_promotion | years_with_curr_manager | attrition |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 41 | female | sales | sales_executive | 2 | 2 | life_sciences | 5993 | 94 | 1102 | 19479 | 11 | 3 | 80 | yes | y | single | travel_rarely | 1 | 0 | 8 | 8 | 0 | 6 | 4 | 0 | 5 | yes |

| 2 | 49 | male | research_development | research_scientist | 2 | 1 | life_sciences | 5130 | 61 | 279 | 24907 | 23 | 4 | 80 | no | y | married | travel_frequently | 8 | 1 | 1 | 10 | 3 | 10 | 7 | 1 | 7 | no |

| 4 | 37 | male | research_development | laboratory_technician | 1 | 2 | other | 2090 | 92 | 1373 | 2396 | 15 | 3 | 80 | yes | y | single | travel_rarely | 2 | 0 | 6 | 7 | 3 | 0 | 0 | 0 | 0 | yes |

| 5 | 33 | female | research_development | research_scientist | 1 | 4 | life_sciences | 2909 | 56 | 1392 | 23159 | 11 | 3 | 80 | yes | y | married | travel_frequently | 3 | 0 | 1 | 8 | 3 | 8 | 7 | 3 | 0 | no |

| 7 | 27 | male | research_development | laboratory_technician | 1 | 1 | medical | 3468 | 40 | 591 | 16632 | 12 | 3 | 80 | no | y | married | travel_rarely | 2 | 1 | 9 | 6 | 3 | 2 | 2 | 2 | 2 | no |

| 8 | 32 | male | research_development | laboratory_technician | 1 | 2 | life_sciences | 3068 | 79 | 1005 | 11864 | 13 | 3 | 80 | no | y | single | travel_frequently | 2 | 0 | 0 | 8 | 2 | 7 | 7 | 3 | 6 | no |

| 10 | 59 | female | research_development | laboratory_technician | 1 | 3 | medical | 2670 | 81 | 1324 | 9964 | 20 | 4 | 80 | yes | y | married | travel_rarely | 3 | 3 | 4 | 12 | 3 | 1 | 0 | 0 | 0 | no |

| 11 | 30 | male | research_development | laboratory_technician | 1 | 1 | life_sciences | 2693 | 67 | 1358 | 13335 | 22 | 4 | 80 | no | y | divorced | travel_rarely | 24 | 1 | 1 | 1 | 2 | 1 | 0 | 0 | 0 | no |

| 12 | 38 | male | research_development | manufacturing_director | 3 | 3 | life_sciences | 9526 | 44 | 216 | 8787 | 21 | 4 | 80 | no | y | single | travel_frequently | 23 | 0 | 0 | 10 | 2 | 9 | 7 | 1 | 8 | no |

| 13 | 36 | male | research_development | healthcare_representative | 2 | 3 | medical | 5237 | 94 | 1299 | 16577 | 13 | 3 | 80 | no | y | married | travel_rarely | 27 | 2 | 6 | 17 | 3 | 7 | 7 | 7 | 7 | no |

| employee_number | job_involvement | relationship_satisfaction | environment_satisfaction | work_life_balance | job_satisfaction |

|---|---|---|---|---|---|

| 1 | 3 | 1 | 2 | 1 | 4 |

| 2 | 2 | 4 | 3 | 3 | 2 |

| 4 | 2 | 2 | 4 | 3 | 3 |

| 5 | 3 | 3 | 4 | 3 | 3 |

| 7 | 3 | 4 | 1 | 3 | 2 |

| 8 | 3 | 3 | 4 | 2 | 4 |

| 10 | 4 | 1 | 3 | 2 | 1 |

| 11 | 3 | 2 | 4 | 3 | 3 |

| 12 | 2 | 2 | 4 | 3 | 3 |

| 13 | 3 | 2 | 3 | 2 | 3 |

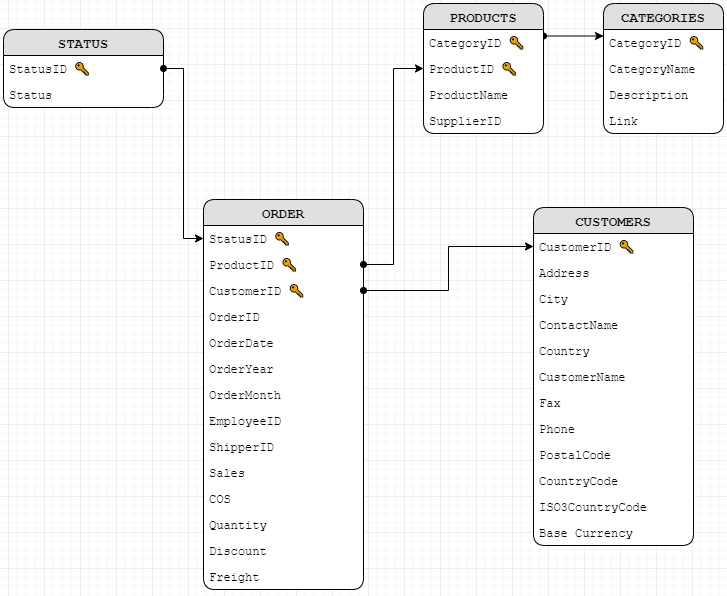

3.4.1.1 Relational Data

In various types of businesses, usually have a lot of data tables that must be combined to answer several business questions that are interested in. Multiple data tables are commonly called relational data because they have relations, not just one single independent data. Here’s an example of relational data looks like:

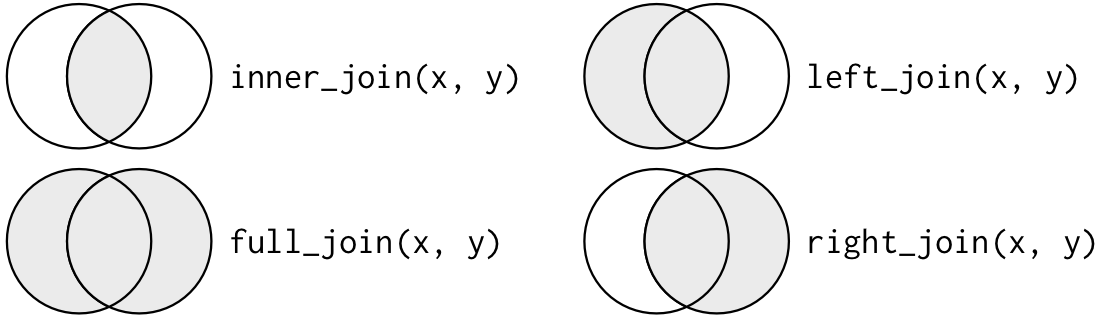

There are two common verbs that are designed to work with relational data: mutating joins and filtering join. But in this section, we will focus more on examples of mutating joins. Lookups to the Introduction to Relational Data for more examples of join data tables.

- Mutating joins adds new variables to one data frame from matching observations in another.

A mutating join allows you to combine variables from two tables. It first matches observations by their keys, then copies across variables from one table to the other. Here are some types of mutating join:

- inner join

- outer join

left_joinright_joinfull_join

Below is an illustration of the different types of joins with a Venn diagram:

3.4.1.1.1 Inner Join

inner_join(x, y): All rows from x where there are matching values in y, and all columns from x and y.

We are going to use this type of join for our data frame.

# joining data frame

data_join <- inner_join(x = attrition, y = survey, by = "employee_number")

head(data_join)| employee_number | age | gender | department | job_role | job_level | education | education_field | monthly_income | hourly_rate | daily_rate | monthly_rate | percent_salary_hike | performance_rating | standard_hours | over_time | over_18 | marital_status | business_travel | distance_from_home | stock_option_level | num_companies_worked | total_working_years | training_times_last_year | years_at_company | years_in_current_role | years_since_last_promotion | years_with_curr_manager | attrition | job_involvement | relationship_satisfaction | environment_satisfaction | work_life_balance | job_satisfaction |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 41 | female | sales | sales_executive | 2 | 2 | life_sciences | 5993 | 94 | 1102 | 19479 | 11 | 3 | 80 | yes | y | single | travel_rarely | 1 | 0 | 8 | 8 | 0 | 6 | 4 | 0 | 5 | yes | 3 | 1 | 2 | 1 | 4 |

| 2 | 49 | male | research_development | research_scientist | 2 | 1 | life_sciences | 5130 | 61 | 279 | 24907 | 23 | 4 | 80 | no | y | married | travel_frequently | 8 | 1 | 1 | 10 | 3 | 10 | 7 | 1 | 7 | no | 2 | 4 | 3 | 3 | 2 |

| 4 | 37 | male | research_development | laboratory_technician | 1 | 2 | other | 2090 | 92 | 1373 | 2396 | 15 | 3 | 80 | yes | y | single | travel_rarely | 2 | 0 | 6 | 7 | 3 | 0 | 0 | 0 | 0 | yes | 2 | 2 | 4 | 3 | 3 |

| 5 | 33 | female | research_development | research_scientist | 1 | 4 | life_sciences | 2909 | 56 | 1392 | 23159 | 11 | 3 | 80 | yes | y | married | travel_frequently | 3 | 0 | 1 | 8 | 3 | 8 | 7 | 3 | 0 | no | 3 | 3 | 4 | 3 | 3 |

| 7 | 27 | male | research_development | laboratory_technician | 1 | 1 | medical | 3468 | 40 | 591 | 16632 | 12 | 3 | 80 | no | y | married | travel_rarely | 2 | 1 | 9 | 6 | 3 | 2 | 2 | 2 | 2 | no | 3 | 4 | 1 | 3 | 2 |

| 8 | 32 | male | research_development | laboratory_technician | 1 | 2 | life_sciences | 3068 | 79 | 1005 | 11864 | 13 | 3 | 80 | no | y | single | travel_frequently | 2 | 0 | 0 | 8 | 2 | 7 | 7 | 3 | 6 | no | 3 | 3 | 4 | 2 | 4 |

3.4.1.1.2 Left Join

left_join(x, y): All rows from x and all columns from x and y. Rows in x with no matches in y will have NA values in the joined data frame.

# generete data dummy

band <- data.frame(name = c("Mick", "John", "Paul"),

band = c("Stones", "Beatles", "Beatles"))

instrument <- data.frame(name = c("John", "Paul", "Keith"),

plays = c("guitar", "bass", "guitar"))

instrument2 <- data.frame(artist = c("John", "Paul", "Keith"),

plays = c("guitar", "bass", "guitar"))| name | band | plays |

|---|---|---|

| Mick | Stones | NA |

| John | Beatles | guitar |

| Paul | Beatles | bass |

3.4.1.1.3 Right Join

right_join(x, y): All rows from y and all columns from x and y. Rows in y with no matches in x will have NA values in the joined data frame.

| name | band | plays |

|---|---|---|

| John | Beatles | guitar |

| Paul | Beatles | bass |

| Keith | NA | guitar |

3.4.1.1.4 Full Join

full_join(x, y): All rows and all columns from both x and y. Where there are non-matching values, returns NA.

| name | band | plays |

|---|---|---|

| Mick | Stones | NA |

| John | Beatles | guitar |

| Paul | Beatles | bass |

| Keith | NA | guitar |

3.4.1.2 [Opt] Working with Database

In real life, data sources are stored in many different ways. Some might have it in CSV files, some have it in excel, some in a server, some are retrieved from API. Some useful skills you might want to put is how to connect to data sources from R. Most of structured databases is stored using a relational database, and SQL is a common DBMS to use. In this example, I’m going to show you how to connect to a remote Microsoft SQL database. Please do note that connecting to a database require different driver, and we will use ODBC connection. In most cases, R is compatible to connect to major databases with available drivers, such as Microsoft SQL Server, SAP, SPSS.

The driver we want to use can be loaded using odbc and some other SQL-related packages. If you are interested to try, let’s start by installing the packages first:

Then call them using library():

Once connected, you can make a chunk that runs an SQL query in it that uses the connection object we just made, or just use it in an R session. This is an example of how to make a connection:

# example of connecting through odbc

cn <- DBI::dbConnect(

odbc::odbc(),

Driver = "SQL Driver Name", # sql driver name as installed in machine

Server = "hostname-or-ip-server", # hostname / IP server

uid = "yourusername", # username

Database = "yourdatabase", # database

PWD = rstudioapi::askForPassword("yourpassword"),

Port = 9999 # your port Number

)3.4.2 Data Cleansing

After importing our data, we can perform data cleansing. These are some criteria that make up a clean data:

Each variable is placed in its own column, each observation in its own row, and each value in its own cell.

This is a very important aspect of clean data. This helps us perform various functions and analysis correctly. An example of this is in the figure below:

Source: Grolemund & Wickham, 2016.

Source: Grolemund & Wickham, 2016.

Standardized column names

R has programming style guides that aim to make R code created by users easier to read, share, and verify. Examples of R programming style guides are Google’s R Style Guide and the Tidyverse Style Guide. Our column names should at least follow this set of style guides. Some examples are naming our columns using lowercase letter and separate it with a dot(.) or underscore(_).

Consistent naming for character-type values (avoids duplicate data)

Numerical values should not contain characters and vice versa

Date-type variables should use either of this format:

ymdorymd-hms

Below is an example of ‘dirty’ data:

| Kode SITC 3 digit | 01-01-2017 | 02-01-2017 | 03-01-2017 | 04-01-2017 | 05-01-2017 | 06-01-2017 | 07-01-2017 | 08-01-2017 |

|---|---|---|---|---|---|---|---|---|

| 001 Live animals other than animals of division 03 | 102.77 | 95.34 | 100.09 | 98.38 | 104.27 | 100.43 | 103.84 | 111.69 |

| 012 Daging lainnya dan sisa daging yang dapat dimakan, segar, dingin atau beku (kecuali daging dan sisa daging yang tidak layak atau tidak cocok untuk konsumsi manusia) | 132 | 161.21 | 115.02 | 108.81 | 107.21 | 99.99 | 111.43 | 102.23 |

| 016 Daging dan sisa daging yang dapat dimakan, asin, dalam air garam, kering atau diasap; tepung dan tepung kasar dari daging atau sisanya | 299.17 | 101.4 | 86.61 | 29.37 | 62.57 | 107.22 | 176.28 | 383.32 |

| 017 Daging dan sisa daging yang dapat dimakan, diolah atau diawetkan, tidak dirinci | 117 | 179.67 | 248.48 | 153.21 | 121.5 | 460.65 | 161.26 | 126.28 |

| 022 Susu dan krim dan produk susu selain mentega atau keju | 96.62 | 129.2 | 98.67 | 100.31 | 107.95 | 91.81 | 94.09 | 91.02 |

| 023 Mentega dan lemak lainnya dan minyak yang berasal dari susu | 2 721.92 | 85.01 | 175.95 | 7 153.54 | 63.33 | 139.16 | 40.26 | 102.77 |

| 024 Keju dan dadih | 74.47 | 105.21 | 61.41 | 85.58 | 20.64 | 88.45 | 97.43 | 93.82 |

| 034 Ikan, segar (hidup atau mati), dingin atau beku | 127.81 | 120.58 | 137.06 | 149.04 | 212.62 | 136.2 | 128.64 | 121.34 |

| 035 Ikan, kering, asin atau dalam air garam; ikan asap (dimasak atau tidak sebelum atau selama proses pengasapan); tepung, tepung kasar dan pelet ikan, layak untuk dikonsumsi manusia | 141.31 | 249.47 | 128.43 | 160.96 | 148.65 | 196.31 | 155.64 | 148.07 |

| 036 Krustasea, moluska dan invertebrata air, berkulit maupun tidak, segar (hidup atau mati), dingin, beku, kering, asin atau dalam air garam; krustasea, berkulit, dikukus atau direbus, dingin atau tidak , beku, kering, asin atau dalam air garam | 157.06 | 301.47 | 109.3 | 181.98 | 173.3 | 135.85 | 127.1 | 360.75 |

This data considered as ‘dirty’ because:

- the variables were placed in rows instead of columns,

- the date-type data assigned as column names (this prevent us from utilizing information related to date)

- the numerical value still labeled as characters (chr),

- the numerical value doesn’t have consistency in writing/storing their data (spaces used as the separator, decimals are not applied to all values, etc.)

On the contrary, here is an example of a ‘clean’ data,

| code | date | export |

|---|---|---|

| 001 Live animals other than animals of division 03 | 2017-01-01 | 102.77 |

| 001 Live animals other than animals of division 03 | 2017-01-02 | 95.34 |

| 001 Live animals other than animals of division 03 | 2017-01-03 | 100.09 |

| 001 Live animals other than animals of division 03 | 2017-01-04 | 98.38 |

| 001 Live animals other than animals of division 03 | 2017-01-05 | 104.27 |

| 001 Live animals other than animals of division 03 | 2017-01-06 | 100.43 |

The above data is already cleaned by changing its data structure using tidyr package. The date information that previously scattered in different columns is now stored in one column date. Additionally, the date information also changed into the correct date data type using lubridate package. These packages are compiled in tidyverse and were simultaneously loaded into our session when we previously loaded tidyverse. Although we will not use all functions and packages provided in tidyverse, we will discuss the basic concept and practices of data cleaning and transformation in R. This understanding will ease your time in exploring many possibilities of data analysis in R in the long run!

Now, let’s look back at our data. We can use the function glimpse() from dplyr package to inspect the structure of our data.

#> Observations: 1,470

#> Variables: 34

#> $ employee_number <dbl> 1, 2, 4, 5, 7, 8, 10, 11, 12, 13, 14, 15, 16, 18, 19, 20,...

#> $ age <dbl> 41, 49, 37, 33, 27, 32, 59, 30, 38, 36, 35, 29, 31, 34, 2...

#> $ gender <chr> "female", "male", "male", "female", "male", "male", "fema...

#> $ department <chr> "sales", "research_development", "research_development", ...

#> $ job_role <chr> "sales_executive", "research_scientist", "laboratory_tech...

#> $ job_level <dbl> 2, 2, 1, 1, 1, 1, 1, 1, 3, 2, 1, 2, 1, 1, 1, 3, 1, 1, 4, ...

#> $ education <dbl> 2, 1, 2, 4, 1, 2, 3, 1, 3, 3, 3, 2, 1, 2, 3, 4, 2, 2, 4, ...

#> $ education_field <chr> "life_sciences", "life_sciences", "other", "life_sciences...

#> $ monthly_income <dbl> 5993, 5130, 2090, 2909, 3468, 3068, 2670, 2693, 9526, 523...

#> $ hourly_rate <dbl> 94, 61, 92, 56, 40, 79, 81, 67, 44, 94, 84, 49, 31, 93, 5...

#> $ daily_rate <dbl> 1102, 279, 1373, 1392, 591, 1005, 1324, 1358, 216, 1299, ...

#> $ monthly_rate <dbl> 19479, 24907, 2396, 23159, 16632, 11864, 9964, 13335, 878...

#> $ percent_salary_hike <dbl> 11, 23, 15, 11, 12, 13, 20, 22, 21, 13, 13, 12, 17, 11, 1...

#> $ performance_rating <dbl> 3, 4, 3, 3, 3, 3, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, ...

#> $ standard_hours <dbl> 80, 80, 80, 80, 80, 80, 80, 80, 80, 80, 80, 80, 80, 80, 8...

#> $ over_time <chr> "yes", "no", "yes", "yes", "no", "no", "yes", "no", "no",...

#> $ over_18 <chr> "y", "y", "y", "y", "y", "y", "y", "y", "y", "y", "y", "y...

#> $ marital_status <chr> "single", "married", "single", "married", "married", "sin...

#> $ business_travel <chr> "travel_rarely", "travel_frequently", "travel_rarely", "t...

#> $ distance_from_home <dbl> 1, 8, 2, 3, 2, 2, 3, 24, 23, 27, 16, 15, 26, 19, 24, 21, ...

#> $ stock_option_level <dbl> 0, 1, 0, 0, 1, 0, 3, 1, 0, 2, 1, 0, 1, 1, 0, 1, 2, 2, 0, ...

#> $ num_companies_worked <dbl> 8, 1, 6, 1, 9, 0, 4, 1, 0, 6, 0, 0, 1, 0, 5, 1, 0, 1, 2, ...

#> $ total_working_years <dbl> 8, 10, 7, 8, 6, 8, 12, 1, 10, 17, 6, 10, 5, 3, 6, 10, 7, ...

#> $ training_times_last_year <dbl> 0, 3, 3, 3, 3, 2, 3, 2, 2, 3, 5, 3, 1, 2, 4, 1, 5, 2, 3, ...

#> $ years_at_company <dbl> 6, 10, 0, 8, 2, 7, 1, 1, 9, 7, 5, 9, 5, 2, 4, 10, 6, 1, 2...

#> $ years_in_current_role <dbl> 4, 7, 0, 7, 2, 7, 0, 0, 7, 7, 4, 5, 2, 2, 2, 9, 2, 0, 8, ...

#> $ years_since_last_promotion <dbl> 0, 1, 0, 3, 2, 3, 0, 0, 1, 7, 0, 0, 4, 1, 0, 8, 0, 0, 3, ...

#> $ years_with_curr_manager <dbl> 5, 7, 0, 0, 2, 6, 0, 0, 8, 7, 3, 8, 3, 2, 3, 8, 5, 0, 7, ...

#> $ attrition <chr> "yes", "no", "yes", "no", "no", "no", "no", "no", "no", "...

#> $ job_involvement <dbl> 3, 2, 2, 3, 3, 3, 4, 3, 2, 3, 4, 2, 3, 3, 2, 4, 4, 4, 2, ...

#> $ relationship_satisfaction <dbl> 1, 4, 2, 3, 4, 3, 1, 2, 2, 2, 3, 4, 4, 3, 2, 3, 4, 2, 3, ...

#> $ environment_satisfaction <dbl> 2, 3, 4, 4, 1, 4, 3, 4, 4, 3, 1, 4, 1, 2, 3, 2, 1, 4, 1, ...

#> $ work_life_balance <dbl> 1, 3, 3, 3, 3, 2, 2, 3, 3, 2, 3, 3, 2, 3, 3, 3, 2, 2, 3, ...

#> $ job_satisfaction <dbl> 4, 2, 3, 3, 2, 4, 1, 3, 3, 3, 2, 3, 3, 4, 3, 1, 2, 4, 4, ...The result above shown that our data consist of 1,470 rows (observations) and 35 columns (variables) whereas each column has different data types. This kind of information is very helpful for us to understand our data. We should also understand the description of each column to evaluate whether that column has the correct data type and whether it has valuable information or not. While most of the column names are self-explanatory, below are additional descriptions for certain columns:

- attrition: employee’s churn status (yes/no)

- education: employee’s education level (1 = Below College, 2 = College, 3 = Bachelor, 4 = Master, 5 = Doctor)

- employee_count: employee’s count for respective id

- employee_number: employee’s id

- num_companies_worked: number of companies worked at

- stock_option_level: how many company stocks employees can own from the company

From the above result, we can see that our data is quite tidy for its structure. Each variable is placed in its own column, each observation in its own row, and each value in its own cell. Even so, we can also see that some columns are not stored in the correct data type. Column education, maybe better stored as factors for it may not represent a continuous scale but a categorical value. All columns stored as character (chr) should also be stored as factors.

The dplyr package from tidyverse provides various functions for data cleaning such as making/changing columns. Not only that, ‘dplyr’ also provides Grammar of Coding that simplifies our work with codes.

We can change the column data type using the following command and we will breakdown for each steps below.

data_join <- data_join %>%

mutate(education = as.factor(education)) %>%

mutate_if(is.character, as.factor)Breakdown

The first thing we need to do is to understand the grammar of coding using tidyverse. If you see at the end of each command from the chunk above, there is a pipe operator

%>%that allows users to perform chaining operations. In chaining operations, the data on the left side of the%>%will be the data used for the next function/command.The first function we used above, is

mutate(). This function is used to transform a column or to create a new column. In this case, we use ‘mutate()’ to transform ‘education’, ‘job_level’, and ‘stock_option_level’ into factor data type using functionas.factor().There is also function

mutate_if()to transform multiple columns with a certain condition, similar to conditional formatting in Excel. In this case, if it stores a character data type (is.character) then it will be transformed into factor usingas.factor().

Now let’s see the first 6 observations of our data to check whether our data is already clean enough.

| employee_number | age | gender | department | job_role | job_level | education | education_field | monthly_income | hourly_rate | daily_rate | monthly_rate | percent_salary_hike | performance_rating | standard_hours | over_time | over_18 | marital_status | business_travel | distance_from_home | stock_option_level | num_companies_worked | total_working_years | training_times_last_year | years_at_company | years_in_current_role | years_since_last_promotion | years_with_curr_manager | attrition | job_involvement | relationship_satisfaction | environment_satisfaction | work_life_balance | job_satisfaction |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 41 | female | sales | sales_executive | 2 | 2 | life_sciences | 5993 | 94 | 1102 | 19479 | 11 | 3 | 80 | yes | y | single | travel_rarely | 1 | 0 | 8 | 8 | 0 | 6 | 4 | 0 | 5 | yes | 3 | 1 | 2 | 1 | 4 |

| 2 | 49 | male | research_development | research_scientist | 2 | 1 | life_sciences | 5130 | 61 | 279 | 24907 | 23 | 4 | 80 | no | y | married | travel_frequently | 8 | 1 | 1 | 10 | 3 | 10 | 7 | 1 | 7 | no | 2 | 4 | 3 | 3 | 2 |

| 4 | 37 | male | research_development | laboratory_technician | 1 | 2 | other | 2090 | 92 | 1373 | 2396 | 15 | 3 | 80 | yes | y | single | travel_rarely | 2 | 0 | 6 | 7 | 3 | 0 | 0 | 0 | 0 | yes | 2 | 2 | 4 | 3 | 3 |

| 5 | 33 | female | research_development | research_scientist | 1 | 4 | life_sciences | 2909 | 56 | 1392 | 23159 | 11 | 3 | 80 | yes | y | married | travel_frequently | 3 | 0 | 1 | 8 | 3 | 8 | 7 | 3 | 0 | no | 3 | 3 | 4 | 3 | 3 |

| 7 | 27 | male | research_development | laboratory_technician | 1 | 1 | medical | 3468 | 40 | 591 | 16632 | 12 | 3 | 80 | no | y | married | travel_rarely | 2 | 1 | 9 | 6 | 3 | 2 | 2 | 2 | 2 | no | 3 | 4 | 1 | 3 | 2 |

| 8 | 32 | male | research_development | laboratory_technician | 1 | 2 | life_sciences | 3068 | 79 | 1005 | 11864 | 13 | 3 | 80 | no | y | single | travel_frequently | 2 | 0 | 0 | 8 | 2 | 7 | 7 | 3 | 6 | no | 3 | 3 | 4 | 2 | 4 |

| 10 | 59 | female | research_development | laboratory_technician | 1 | 3 | medical | 2670 | 81 | 1324 | 9964 | 20 | 4 | 80 | yes | y | married | travel_rarely | 3 | 3 | 4 | 12 | 3 | 1 | 0 | 0 | 0 | no | 4 | 1 | 3 | 2 | 1 |

| 11 | 30 | male | research_development | laboratory_technician | 1 | 1 | life_sciences | 2693 | 67 | 1358 | 13335 | 22 | 4 | 80 | no | y | divorced | travel_rarely | 24 | 1 | 1 | 1 | 2 | 1 | 0 | 0 | 0 | no | 3 | 2 | 4 | 3 | 3 |

| 12 | 38 | male | research_development | manufacturing_director | 3 | 3 | life_sciences | 9526 | 44 | 216 | 8787 | 21 | 4 | 80 | no | y | single | travel_frequently | 23 | 0 | 0 | 10 | 2 | 9 | 7 | 1 | 8 | no | 2 | 2 | 4 | 3 | 3 |

| 13 | 36 | male | research_development | healthcare_representative | 2 | 3 | medical | 5237 | 94 | 1299 | 16577 | 13 | 3 | 80 | no | y | married | travel_rarely | 27 | 2 | 6 | 17 | 3 | 7 | 7 | 7 | 7 | no | 3 | 2 | 3 | 2 | 3 |

We can see that our attrition data is clean enough and therefore we can continue to the data transformation.

3.4.3 Data Transformation

Data transformation is a process of converting data from one format or structure into another. Data transformation is a useful tool for Explanatory Data Analysis (EDA) which aims to gain insight and improve our understanding of data by looking at a more detailed or narrower perspective, based on our business question. The package dplyr also provides various functions for data transformation such as making/changing columns, subsetting columns/rows, and summarizing data.

To put it into practice, let’s try performing data transformation to our data. Imagine ourselves as an HR staff who wants to evaluate the employee’s attrition status of your company. We would like to know:

Which department gives the highest number of employee attrition?

The following are complete codes that command R to perform the data transformation. We will breakdown each step and explain it in detail.

data_join %>%

group_by(attrition, department) %>%

summarise(count = n()) %>%

ungroup() %>%

filter(attrition == "yes") %>%

select(-attrition) %>%

arrange(desc(count))| department | count |

|---|---|

| research_development | 133 |

| sales | 92 |

| human_resources | 12 |

Breakdown

- In the example above, the first thing we do is creating a summarise of our data using

group_by(),summarise(), andungroup(). The functiongroup_bywill take existing data (columns) and converts it into a ‘grouped table’ where operations are performed ‘by group’. The functionsummarise()will perform a summary statistic calculation, in this case, count by usingn()on the ‘grouped table’ from the previous chaining operation. The functionungroup()remove the grouping condition. These functions are quite similar to the pivot table in Excel.

| attrition | department | count |

|---|---|---|

| no | human_resources | 51 |

| no | research_development | 828 |

| no | sales | 354 |

| yes | human_resources | 12 |

| yes | research_development | 133 |

| yes | sales | 92 |

- The next function is

filter(). We can use this function to subset our row data based on specific conditions. In this case, we filter (only take) every observation or rows from column attrition which have ‘yes’ as it values.

data_join %>%

group_by(attrition, department) %>%

summarise(count = n()) %>%

ungroup() %>%

filter(attrition == "yes")| attrition | department | count |

|---|---|---|

| yes | human_resources | 12 |

| yes | research_development | 133 |

| yes | sales | 92 |

- Next we can use

select()to remove the column attrition from our data.

data_join %>%

group_by(attrition, department) %>%

summarise(count = n()) %>%

ungroup() %>%

filter(attrition == "yes") %>%

select(-attrition)| department | count |

|---|---|

| human_resources | 12 |

| research_development | 133 |

| sales | 92 |

- The final step is to sort our data using

arrange(). To sort it descending, we can use the helper functiondesc():

data_join %>%

group_by(attrition, department) %>%

summarise(count = n()) %>%

ungroup() %>%

filter(attrition == "yes") %>%

select(-attrition) %>%

arrange(desc(count))| department | count |

|---|---|

| research_development | 133 |

| sales | 92 |

| human_resources | 12 |

From the result above, we know that the department which gives the highest number of employees attrition is from Research and Development. This department may need further analysis regarding its employee’s attrition.

The above example shows how we can summarise our full data into a simplified version which only displays the number of leaving employees (attrition = “yes”) per department. Others might prefer to display both staying and leaving employees from all departments but with a more compressed structure. For example, we will use some of the code we made earlier but without filtering.

Instead, we will transform our data into its wider format using function pivot_wider() from tidyr package. This function will separate the values from column ‘attrition’ into 2 different columns and filling it with values from column ‘count’.

data_pivot <- data_join %>%

group_by(attrition, department) %>%

summarise(count = n()) %>%

ungroup() %>%

pivot_wider(names_from = attrition, values_from = count)

data_pivot| department | no | yes |

|---|---|---|

| human_resources | 51 | 12 |

| research_development | 828 | 133 |

| sales | 354 | 92 |

Alternatively, we can transform data from its wide into its long format using pivot_longer():

data_pivot %>%

pivot_longer(cols = c(no, yes), # columns to be joined

names_to = "attrition", # name of new column

values_to = "count") # name of new column (value) | department | attrition | count |

|---|---|---|

| human_resources | no | 51 |

| human_resources | yes | 12 |

| research_development | no | 828 |

| research_development | yes | 133 |

| sales | no | 354 |

| sales | yes | 92 |

There are many more functions and technical details that we can explore further from tidyverse. If you are interested, you can visit the following articles:

Dive Deeper

The grammar of code from ‘dplyr’ is very powerful for Exploratory Data Analysis (EDA). Using attrition data, try to answer the following questions to sharpen your intuition on data analysis using R:

- From the Research and Development department, which job role gives the highest number of employee attrition and what is their average relationship satisfaction? What insight can we take from the result?

- Is there gender inequality in your company? Calculate the percentage of the female and male employees from each department!

You can also create your own question and try to answer it to sharpen your analytical skills!